Path Planning with Kinodynamic Constraints

Problem Statement

This project focuses on a real-time trajectory planner for drones operating in unknown environments under kinodynamic constraints. The primary aim is to develop an intelligent navigation system for drones that can operate autonomously in environments that are not known beforehand. The research specifically addresses the challenge of incorporating kinodynamic constraints considering both kinematic limitations and dynamic constraints. The emphasis on real-time computation is crucial because decisions must be made instantaneously during flight and path adjustments need to occur immediately when new obstacles are detected. The primary research question to be investigated in this study is:

How can drones effectively navigate and avoid obstacles in real-time while operating in completely unknown environments?

Implementation

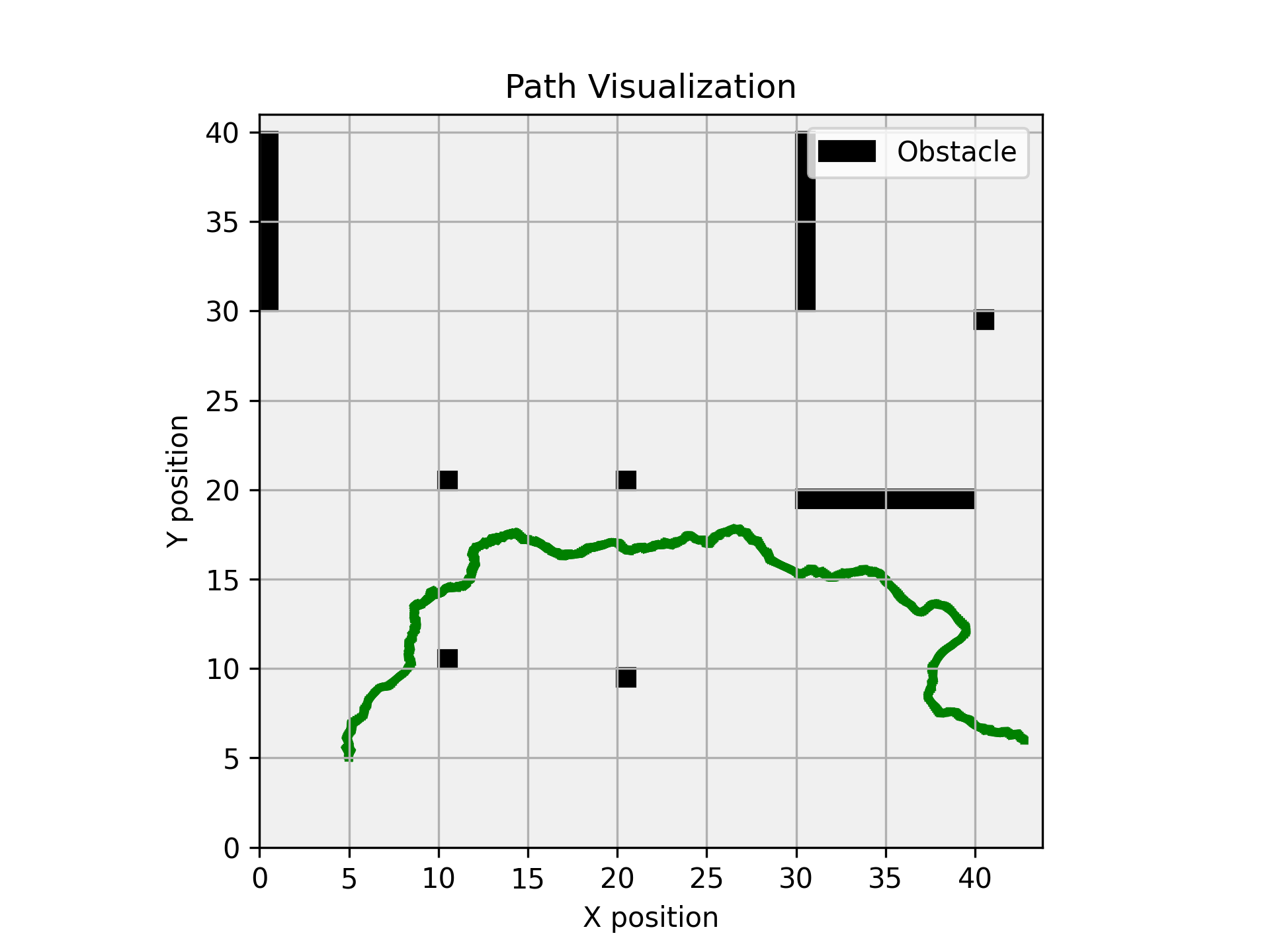

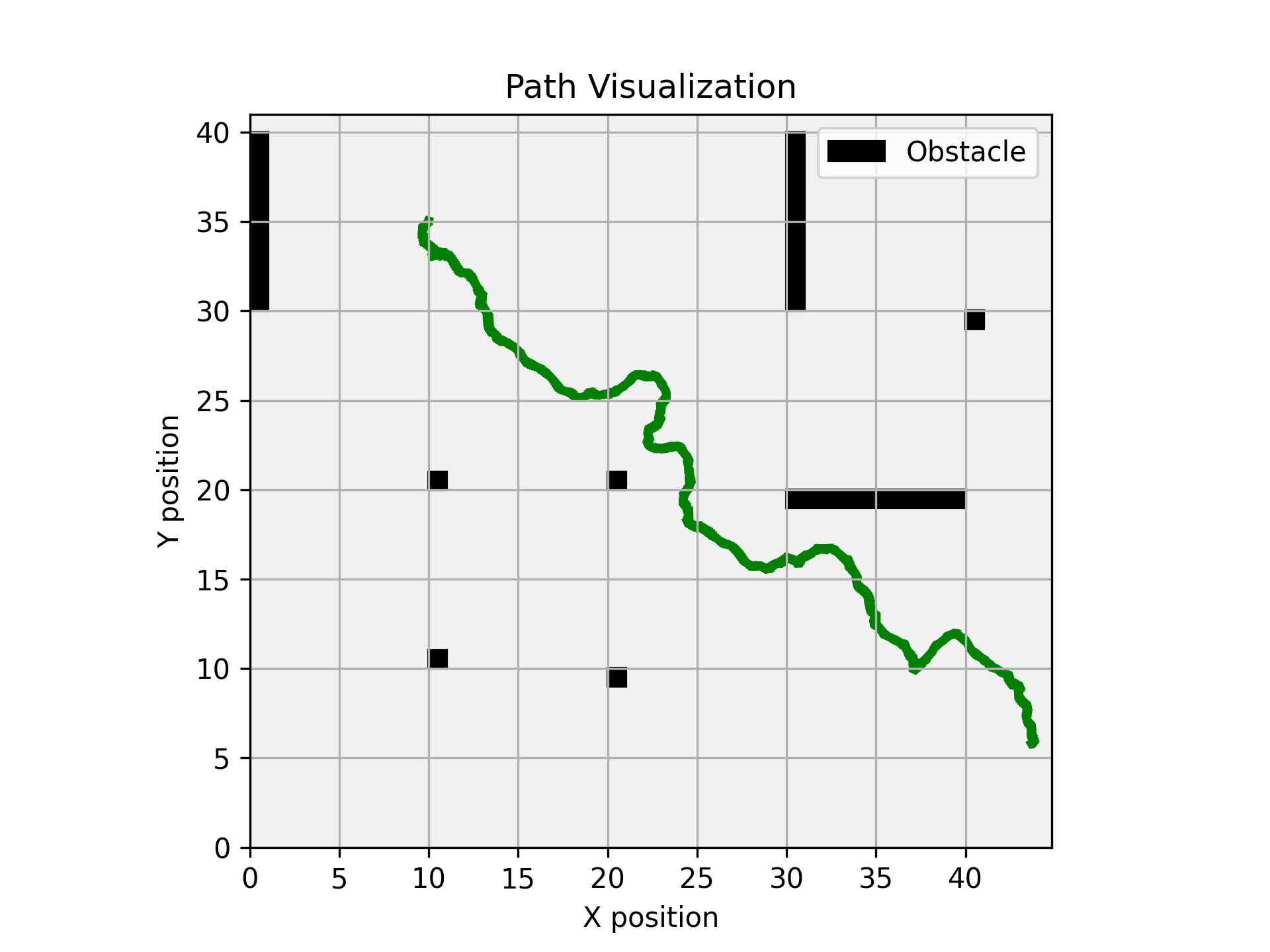

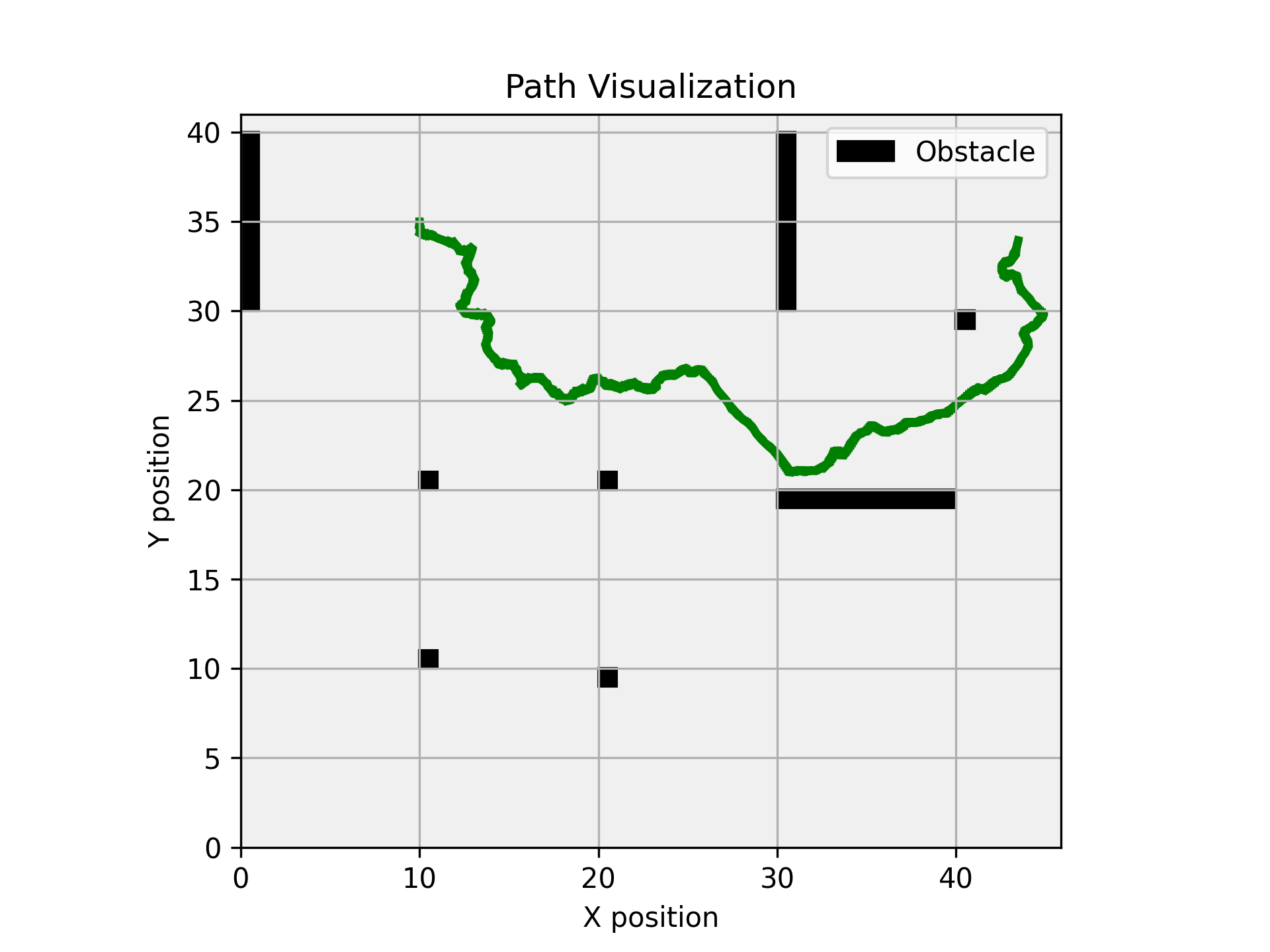

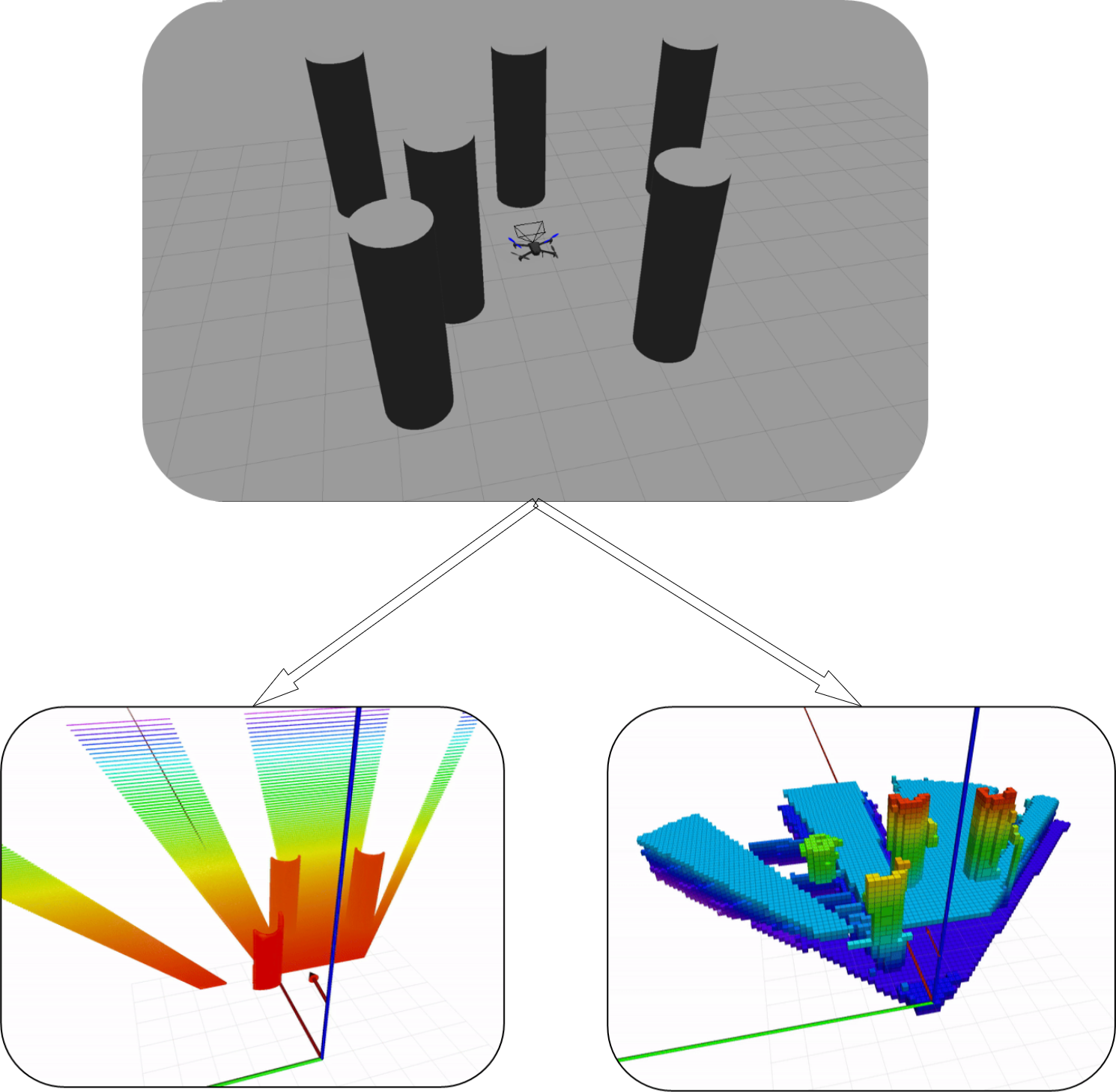

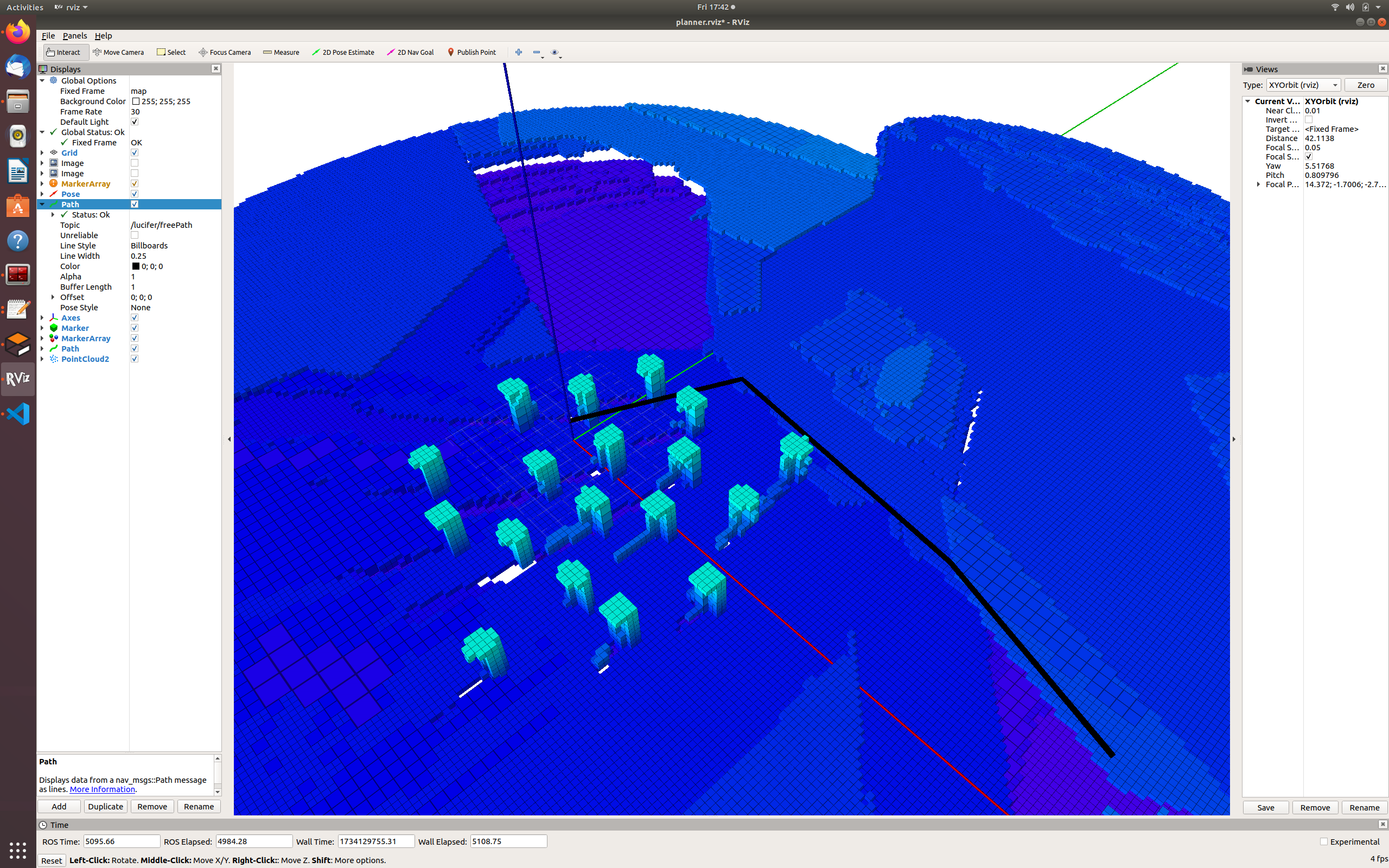

Our framework implements a kinodynamically-constrained RRT planner that synthesizes feasible trajectories by incorporating the drone’s dynamic and kinematic constraints during path computation from start to goal states. While operating in the exploration phase, our system employs the tree-based RRT planner since it provides efficient sampling-based exploration of configuration space, enables real-time path computation, and naturally incorporates both kinodynamic constraints and information gain metrics for unknown environment navigation. Our implementation of the planner consists of an addition to the original RRT planner. Since we can only see the obstacles upto a certain range from the drone, we cannot plan the complete trajectory in one shot. To achieve a trajectory from start to goal, we compute the sub-paths till the visible region and append these to create the complete path. At the initial configuration, the drone plans the complete path from start to goal. However, since it can only see upto the visible region, we extract the sub-path from the planned trajectory and prune the rest of the path. The last node in the visible region now becomes our new start position and we again plan the trajectory from the new start position to the goal position. We repeat the pruning process and re-assigning of the start position until our goal is inside the visible region. Every iteration of this adds a sub-path to our global path of the initial start and goal conditions.

OMPL Environment

The 2D and 3D environment was designed as a simple workspace where planning tasks relied solely on the OMPL framework. It served as a baseline to evaluate the planner’s ability to generate kinodynamically feasible trajectories without the added complexity of integrating mapping and collision detection libraries.

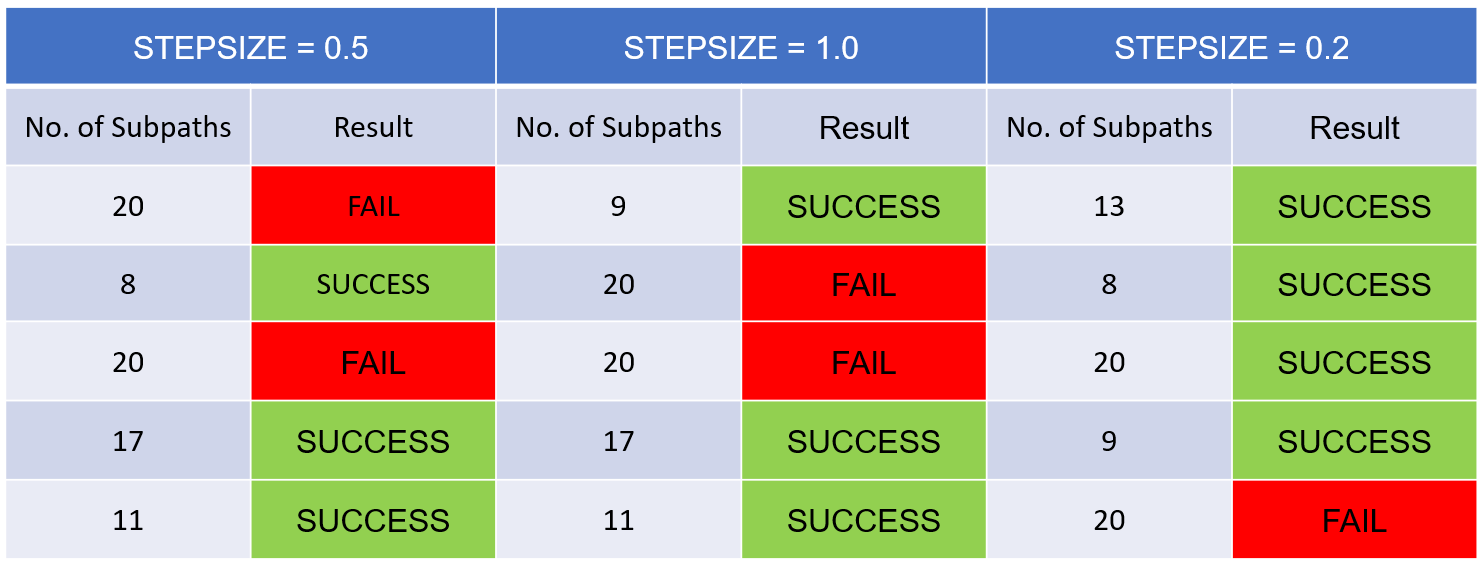

• Evaluations were performed on the 2D planner, taking three different step sizes, where the planner was run 5 times for each condition.

• It was observed that lower step sizes resulted in higher probability of finding the path, where the success rate was defined on number of sub-paths planned for the complete path.

• If the number of sub-paths crossed 20 and the planner failed to reach the goal region then the result of the planner was indicated as FAIL else it was indicated as SUCCESS.

Gazebo Environment

The second environment was more complex, featuring a group of pillars represented within an OctoMap framework. These pillars were modeled as obstacles, providing a realistic and cluttered environment for testing. The OctoMap provided a hierarchical representation of the 3D space, while the EDT3D library enabled precise local collision detection by computing the distances to obstacles within a defined local window around the drone.

Challenges and Results

The integration of OctoMap and EDT3D posed additional computational challenges due to the high demands of processing large maps and performing real-time collision detection. However, the offline setup allowed the planner to utilize the pre-generated map efficiently. The results demonstrated:

• Accuracy: The planner effectively avoided all obstacles in the environment in its successful run, showcasing its robust collision avoidance capabilities.

• Scalability: The modular design of the planner enabled it to handle both simpler OMPL-only scenarios and more complex OctoMap-based environments with ease.

• Flexibility: The planner adapted seamlessly to varied start and goal configurations while maintaining smooth, kinodynamically feasible trajectories.

Platform and Evaluation

Tools and Platforms used on the project are as follows:

Limitations of the Project

• Since the main focus of the project is planning of the motion of the drone, the project would be tested and targeted for a simulation environment and not a real world environment.

• Our system would not be as fast as RL based approaches since the sequential nature of our mapping-then-planning architecture introduces additional processing time compared to RL-based methods which learn to map and plan concurrently.

• The approach followed in this paper is meant for static environments and is not very suitable for dynamic scenes.